ChatGPT et al? Not So Fast, Say Journal Editors

Artificial intelligence tools are already changing scientific publishing, but oversight is questionable and concerns abound.

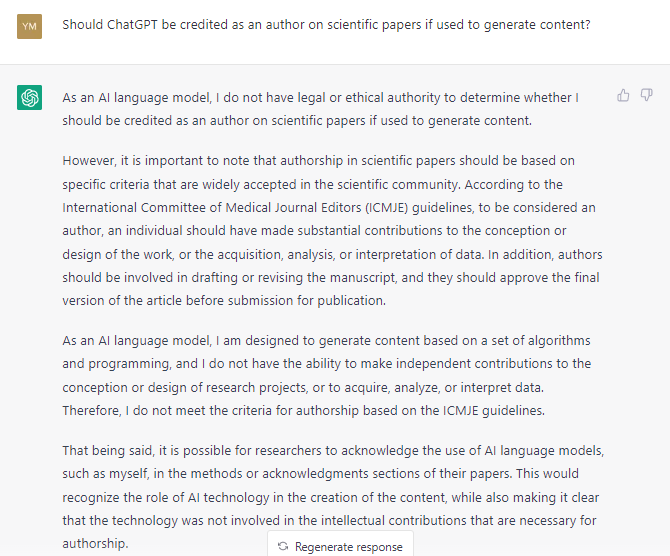

What would the natural language processing tool ChatGPT say if asked whether it should be credited as an author on a scientific paper for which it helped generate content?

“As an AI [artificial intelligence] language model, I do not have legal or ethical authority to determine whether I should be credited as an author on scientific papers if used to generate content,” it replied to just such a request, for this story, last month. “I am designed to generate content based on a set of algorithms and programming, and I do not have the ability to make independent contributions to the conception or design of research projects, or to acquire, analyze, or interpret data.”

By that definition, the AI would conclude that it cannot be a true author, at least according to the International Committee of Medical Journal Editors (ICMJE) guidelines.

ChatGPT (OpenAI), in which GPT stands for generative pretrained transformer, was released in November 2022 as a freely available natural language processing tool designed to answer questions and converse in an eloquent, well-researched fashion. While some have had fun asking it to write wedding vows, author student essays, or explain moderate-risk TAVI in the style of Donald Trump, the implications are perhaps more consequential for the field of scientific publishing, where a handful of papers have already been released, and indexed in PubMed, with ChatGPT listed as an author.

Editors from the JACC family of journals released an editorial last month explaining how they came to the conclusion that AI-based tools cannot be credited as authors but may be used to help manipulate text or images with prior approval.

JAMA editors, too, published a statement in January—only 2 months after ChatGPT was released for public use—instructing authors to “take responsibility for the integrity of the content generated by these tools” and potentially mention them either in the acknowledgements or methods section of the paper.

Likewise, the American Heart Association (AHA) family of 14 journals updated their policies regarding use of AI-assisted tools, specifically updating their guidelines for figures “to make clear that these tools would be treated like any other digital manipulation,” according to a spokesperson.

Recently Elsevier, the publisher of a wide variety of peer-reviewed journals, also came out with a new policy governing how these technologies can be used, specifically mentioning that they cannot be “listed or cited as authors,” a spokesperson told TCTMD. Though an initial email about this policy caused some confusion by stating that AI was “not to replace key researcher tasks such as interpreting data,” a revision now only includes instructions for scientific writing.

This “guidance only refers to the writing process, and not to the use of AI tools to analyze and draw insights from data as part of the research process,” the Elsevier spokesperson clarified.

Policies and Practice

How physicians and scientists keep up with these fast-changing policies remains to be seen.

“The first thing to realize is that it's really a rapidly moving field, and anything that journals and journal editors do today will likely have to be revised tomorrow as the sophistication of the tools increases,” Douglas Mann, MD (Washington University School of Medicine in St. Louis, MO), editor-in-chief of JACC: Basic to Translational Research, told TCTMD.

In an interview he conducted with ChatGPT, Mann asked it “two divergent questions and it came up with two articulate answers,” which proves that the bot can be manipulated to give humans whatever they are looking for. This was partly the impetus to come up with a standard set of policies for the JACC journals preemptively. “We wanted to get out in front of it before it came to us as a paper and we did not have a policy,” he said.

Eldrin Lewis, MD, MPH (Stanford Medicine, Palo Alto, CA), who serves as chair of the AHA’s scientific publishing committee, told TCTMD that, in general, his team of editors is “supportive of the technology because we know that times are changing.” However, he continued, “we have to really look carefully about how it's being applied and then allow autonomy with the editors-in-chief for really getting into the specifics of its use.”

Brahmajee Nallamothu, MD, MPH (University of Michigan, Ann Arbor), who serves as editor-in-chief of Circulation: Cardiovascular Quality & Outcomes, said the overall AHA policy is “pretty open” right now, asking for transparency from authors in specifying if and how they used AI in their papers, as well as accountability. “There’s a danger if people get lax about that,” he told TCTMD. “It's important to understand that this tool is there to assist you, but it's not to take over full responsibility of the content that's being generated.”

Human Responsibilities

But using the tool, many said, is not the same thing as treating it as a peer in the publication process. “I am fully confident that there is no place for ChatGPT or similar tools as authors,” Giuseppe Biondi-Zoccai, MD (Sapienza University of Rome, Italy), who serves as the editor-in-chief of Minerva Cardiology Angiology, told TCTMD. “My take is that you cannot copy and paste verbatim from ChatGPT into a manuscript” because that can cause copyright issues, especially since the bot does not currently provide references from where it sources data, he said.

Nicole Bart, MBBS, DPhil (Brigham and Women's Hospital, Boston, MA; St. Vincent's Hospital, Sydney, Australia), who posed the question of whether AI qualifies for authorship to a panel of journal editors at the recent Technology and Heart Failure Therapeutics (THT) 2023 meeting in Boston, MA, agreed. “As an author, you are attributable for your section of the paper and you are also responsible for the overall paper and for the final manuscript,” she told TCTMD. “And AI does not have that capacity at present, and therefore does not qualify for authorship.”

She has been pleased with the response from journals so far, with policies leaving “space for evolution” of the technology that will inevitably occur with time. But ultimately, Bart said, it comes down to the question of academic rigor, which AI-assisted tools can’t yet meet. “We can't allow our published manuscripts in JACC or Circulation: Heart Failure to have errors, because then as clinicians, we're looking at these journals to change our practice,” she said.

The potential benefits of these tools are “enormous,” according to Biondi-Zoccai, especially for people looking to prepare a manuscript introduction, create background for a clinical trial protocol, or become acquainted with a topic before asking for study funding. “But we need to be in charge,” he said. “If I am driving my Tesla—it is a self-driving Tesla—I still need to keep my eyes open, and I take responsibility for everything that Tesla will do.”

Inspecting the Brakes

Others who spoke with TCTMD are less than thrilled about AI becoming ubiquitous in scientific publishing.

“My biggest concern, and maybe it is because I saw the Terminator movies, is that it is just not controlled very well,” Ajay J. Kirtane, MD, SM (NewYork-Presbyterian/Columbia University Irving Medical Center, New York, NY), told TCTMD. “I don't get a sense that the brakes, necessarily, have been inspected on the car. Are there any brakes at all, frankly?”

Lewis said that he’s “not as enthusiastic” as some regarding the potential for AI in this space, citing the potential for plagiarism, inaccurate content, and even confidentiality breaches. While he’s trying to keep an open mind, some uses are too high-risk for him to feel confident with these tools.

“A particular concern for me is confidentiality,” Lewis explained. “Imagine if ChatGPT helps in five late-breaking clinical trials, four of which are with publicly traded companies, and you basically dump the data there 3 months ahead of time in order to draft the manuscript and get it ready for simultaneous publication. If that information gets into the marketplace, it could manipulate the market.”

In this “big era of change,” Nallamothu agreed that there is not enough transparency about what happens to information entered into bots like ChatGPT. “It's unclear what they are doing with the data, and so I think we have to think about this not only in the context of when you think about the clinical trials piece, but people are trying to think about how do you use these large language models for summarizing patient information,” he said. “Those are things that start to open up a number of cans of worms when we start to think about privacy issues around these.”

Communication Helper

As for where AI-assisted tools might be able to help, Javier Escaned, MD, PhD (Hospital Clínico San Carlos, Madrid, Spain), pointed out that writing doesn’t come naturally to all humans, even those who are great at clinical research. “At the end of the day, communicating science is not like communicating any other information,” he said. “It relies strongly on your ability to guide the reader through very complex mechanisms successfully from the beginning to the end—a huge mass of data such that you'll be able to take a thread that links everything in an ordered fashion, and this is the bottom line.”

While it might be tempting for an author to ask a tool like ChatGPT to do this in full, Escaned said that would not be appropriate. However, for non-English speakers like himself especially, it might be helpful for the bot to “fix my problems and depict something in a way that [reads] much better.”

Nallamothu said he often runs into issues related to poor writing or a bad grasp of the English language in his role as editor. “So many good ideas are oftentimes hidden behind bad writing,” he said. “There are countless manuscripts where we want to be excited about the paper, and yet the confusion with its presentation and oftentimes just inability to get a clear message through diminishes our enthusiasm for it and the overall impact of the work.”

AI does have the potential to “level the playing field” for these kinds of people, Lewis acknowledged. “If you're not a good writer, then that could actually be the end of the beginning of your career in research,” he said. One caveat, though: the author is ultimately responsible for the accuracy of the content, so he or she can’t trust ChatGPT or another tool to provide accurate information unless a human double-checks it first.

“It has a lot of potential upside, and I don't think we should throw the baby out with the bathwater with AI,” Mann said. Already, authors use tools like Grammarly to help formulate correct sentence structures that ease understanding for reviewers and readers, he noted. “It's a problem if you can't understand the sentences that the author is writing, as it makes it hard to evaluate the science. You spend most of the time trying to understand what they are saying rather than what they have said.”

And as Kirtane pointed out, anyone using Microsoft Word today likely takes advantage of its built-in tools for checking spelling and grammar to improve their writing. Skeptics of ChatGPT might not want to use it for their own purposes now, “but once it is incorporated into the word processors, frankly, I am sure people will use it,” he said.

Bart, too, cited the program EndNote, which many authors use to help manage and format references.

Lewis likened the potential of ChatGPT for papers to how PowerPoint has enabled researchers to enhance scientific papers over the last 25 years. “It would often take hours to generate one figure for a manuscript and now you can kind of take the data and put it together,” he said. “So there could be some applicability that can actually achieve the goal of disseminating science without necessarily affecting the content.”

Help Without Harm

But how best to use ChatGPT as a tool that can help authors produce better information without handing over too much control remains to be seen.

“I know folks who have put into ChatGPT: ‘Cut this manuscript down from 1,500 to 1,000 words,’ and it does a remarkable job of that,” Kirtane said, noting that it can be difficult to know where to draw the line on what to ask of the tool.

“I don't think it's a tool that's ready for 'write a review article on coronary atherosclerosis,'” Nallamothu said. “It will give you the most basic and boring language around it. But when . . . you give it very specific prompts like 'make this paragraph clearer' or 'write this in the language of an 8th grader,' it seems like there's a real power in terms of doing that.”

Nallamothu hasn’t yet used ChatGPT or similar bots in any professional capacity, but he has run some older published manuscripts of his through it to see how it might rewrite sections. “I found that it can be useful, but it can also be almost like a toy that's a distraction, to be honest,” he said.

He cautioned, too, that overuse could lead to loss of quality of thought. “When I write, oftentimes that's kind of how I think,” Nallamothu said. “I do think that there is going to be something subtly lost when we give up entirely that process of writing to an AI-assisted tool.”

The potential for AI tools to also help publishers shouldn’t be discounted, according to Escaned. “My impression is that publishers should be soon getting AI systems to double-check the material that has been sent to us,” he said. “So I don't think this is going to work only in one direction. . . . These systems will be able to immediately detect, for example, some hints of AI assistance.”

Biondi-Zoccai’s journal uses a plagiarism-checking system for its submissions, and he envisions that it would not be difficult to enlist a similar type of tool to check for AI-generated text. “I think we will need to add these to our screening procedures,” he said.

Escaned predicts that AI will even increase the volume of work submitted to journals because “one of the reasons many papers never become submitted for one reason or the other is because it is a lot of work to write a good paper and produce everything. . . . I anticipate that it will speed up the writing of manuscripts.”

Ethics and Innovation

Ultimately, Lewis said proper use of AI-assisted tools in the scientific publishing space will take some “auto-policing.” Like anything, though, “we have to ensure that the ethics will keep up with the innovation. So, I think it's going to be really important to balance that as we look at the utilization.”

Further, journals and editors have to have flexibility as authors begin to “push the envelope,” so to speak, Lewis continued. “I, frankly, think that this should go across the scientific publishing community so that we have a uniform approach to the use of this technology.”

For Biondi-Zoccai, AI tools are appealing because they democratize knowledge. “We need to see this as a way to improve common expertise, shared expertise, shared knowledge,” he said, adding that the progress should still go slowly to avoid complications. “Anybody who wants to start [working with] these tools should do the same. The only way to succeed is to leverage them and use them in a meaningful fashion.”

On the other hand, Kirtane said he sees “far more downside than upsides” with tools like ChatGPT, citing a recent open letter penned by more than 1,000 leaders in technology wanting to press pause on further development in this space.

Whether a pause has any chance of letting humans catch up to formulate an approach and reach consensus in any of the many fields touched by this exploding technology—scientific publication among them—only time will tell.

This is a “landmark” moment, said Escaned, for people “to really start thinking seriously about the potential of artificial intelligence. . . . It will be very important over the next years to start a strong education campaign on how to properly and responsibly use these systems.”

Yael L. Maxwell is Senior Medical Journalist for TCTMD and Section Editor of TCTMD's Fellows Forum. She served as the inaugural…

Disclosures

- Bart, Biondi-Zoccai, Escaned, Lewis, Kirtane, Nallamothu, and Mann report no relevant conflicts of interest.
